
How does latency work?

Latency is the time delay between an AI system receiving an input and producing the corresponding output. More specifically, it measures how long a model takes to process its inputs and run inference to produce a prediction or decision.
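To make the definition concrete, the sketch below times a single inference call with Python's `time.perf_counter`. The model, `toy_model`, is a hypothetical stand-in (a fixed linear scorer), and `measure_latency` is an illustrative helper, not part of any library.

```python
import time
from typing import Callable, Sequence, Tuple


def toy_model(features: Sequence[float]) -> float:
    # Hypothetical stand-in for a trained model: a fixed linear scorer.
    weights = [0.4, -0.2, 0.7]
    return sum(w * x for w, x in zip(weights, features))


def measure_latency(
    model: Callable[[Sequence[float]], float], features: Sequence[float]
) -> Tuple[float, float]:
    """Run one inference and return (prediction, latency in milliseconds)."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    prediction = model(features)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return prediction, elapsed_ms


if __name__ == "__main__":
    pred, ms = measure_latency(toy_model, [1.0, 2.0, 3.0])
    print(f"prediction={pred:.2f}  latency={ms:.4f} ms")
```

A single timing is noisy (first-call warm-up, caching, and background load all affect it), so in practice latency is usually measured over many calls and summarized as a distribution.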

Latency arises from several operations: data preprocessing, the mathematical computations inside the model, data transfers between processing units (for example, between CPU and GPU memory), and output postprocessing. Larger models with more parameters, such as large deep learning models, typically have higher latency because each inference requires more computation.
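Timing each of these stages separately shows where latency accumulates. This is a minimal sketch assuming a three-stage pipeline: `preprocess`, `infer`, `postprocess`, `stage_timer`, and `run_pipeline` are hypothetical placeholders, and data transfer between processing units is not modeled because this toy pipeline runs entirely on the CPU.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List, Tuple


@contextmanager
def stage_timer(name: str, timings: Dict[str, float]) -> Iterator[None]:
    """Store the wall-clock duration of the enclosed block in timings[name] (ms)."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = (time.perf_counter() - start) * 1000.0


def preprocess(raw: str) -> List[float]:
    # Hypothetical preprocessing: parse comma-separated numbers and scale by the max.
    # Assumes non-negative inputs; falls back to 1.0 if every value is zero.
    values = [float(v) for v in raw.split(",")]
    peak = max(values) or 1.0
    return [v / peak for v in values]


def infer(features: List[float]) -> float:
    # Hypothetical model computation: a simple weighted sum.
    return sum(0.5 * x for x in features)


def postprocess(score: float) -> str:
    # Hypothetical postprocessing: map the raw score to a label.
    return "positive" if score > 0.5 else "negative"


def run_pipeline(raw: str) -> Tuple[str, Dict[str, float]]:
    timings: Dict[str, float] = {}
    with stage_timer("preprocess", timings):
        features = preprocess(raw)
    with stage_timer("inference", timings):
        score = infer(features)
    with stage_timer("postprocess", timings):
        label = postprocess(score)
    timings["total"] = sum(timings.values())  # sum of the three stage timings
    return label, timings
```

Calling `run_pipeline("2,4,8")` returns the label `"positive"` along with per-stage timings in milliseconds. In a real system, a breakdown like this indicates whether optimization effort belongs in the model itself or in the code around it.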

Reducing latency involves streamlining the model architecture and inference code, eliminating unnecessary operations, optimizing data transfers, applying model compression techniques, and using lower-precision numerical formats. Hardware and execution improvements, such as dedicated AI accelerators, higher memory bandwidth, and greater parallelism, also reduce inference latency.
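Lower-precision formats cut latency by shrinking the data that must be moved (an int8 value is a quarter the size of a float32) and, on hardware that supports it, by allowing cheaper integer arithmetic. The sketch below illustrates one common scheme, symmetric per-tensor int8 quantization, in plain Python. The function names are hypothetical, and the code shows only the arithmetic, not the speedup: production frameworks run this with optimized kernels rather than Python loops.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: value ~= q * scale, q in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [code * scale for code in q]


def int8_dot(q_a: List[int], scale_a: float, q_b: List[int], scale_b: float) -> float:
    """Dot product computed on int8 codes, rescaled once at the end."""
    acc = sum(a * b for a, b in zip(q_a, q_b))  # integer accumulation
    return acc * scale_a * scale_b


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.03, 0.9]
    inputs = [1.0, 0.2, -0.5, 0.3]
    q_w, s_w = quantize_int8(weights)
    q_x, s_x = quantize_int8(inputs)
    exact = sum(w * x for w, x in zip(weights, inputs))
    approx = int8_dot(q_w, s_w, q_x, s_x)
    print(f"float dot={exact:.4f}  int8 dot={approx:.4f}")
```

The dot product accumulates in integers and applies the two scale factors once at the end, so the result approximates the full-precision value while trading a small accuracy loss for less memory traffic and cheaper arithmetic.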

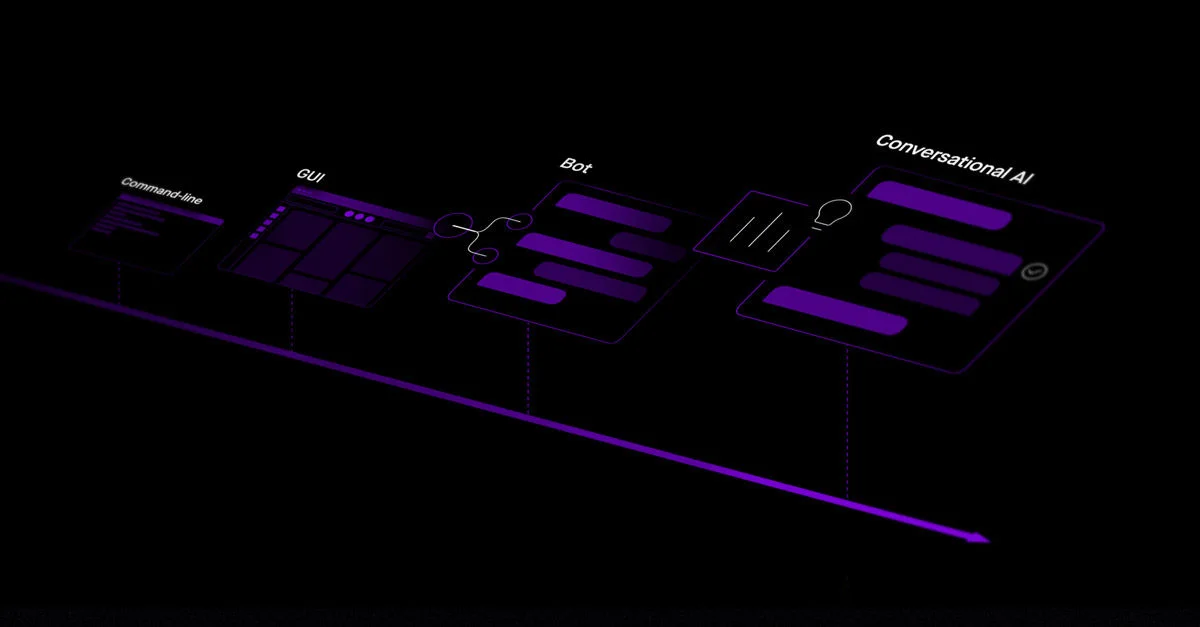

Latency affects the perceived responsiveness of an interactive AI application. High latency produces laggy or delayed responses that harm the user experience, while low latency enables real-time interaction. The acceptable threshold depends on the use case; conversational systems, for example, require very low latency. Overall, managing and minimizing latency is critical for usable, responsive, and accessible AI applications.
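Because latency varies from request to request, a threshold is commonly checked against a high percentile (such as p95) rather than the average, so that occasional slow responses are not hidden. The sketch below uses the nearest-rank percentile definition; the helper names, the sample latencies, and the 200 ms budget are illustrative assumptions, not recommended values.

```python
import math
from typing import Sequence


def percentile(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 <= pct <= 100:
        raise ValueError("pct must be between 0 and 100")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100.0))  # 1-based rank
    return ordered[rank - 1]


def meets_budget(samples_ms: Sequence[float], budget_ms: float, pct: float = 95.0) -> bool:
    """True if the pct-th percentile latency is within the budget."""
    return percentile(samples_ms, pct) <= budget_ms


if __name__ == "__main__":
    # Hypothetical latencies (ms) measured for ten requests.
    samples = [120, 95, 130, 110, 480, 105, 99, 140, 115, 125]
    print("p50:", percentile(samples, 50), "ms")
    print("p95:", percentile(samples, 95), "ms")
    print("meets 200 ms p95 budget:", meets_budget(samples, 200))
```

With these samples the median is 115 ms but the p95 is 480 ms, so the budget check fails even though most requests are fast.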

Why is low latency important?

Minimizing latency is essential for performant, responsive AI applications. Long delays between inputs and outputs cripple usability and hinder the adoption of AI systems. Low latency enables the snappy, real-time interactions that many AI applications depend on, such as conversational interfaces, autonomous systems, and interactive analytics. Shorter delays improve user experience and satisfaction by eliminating frustrating waits.

Lower latency also allows complex models to be used in time-sensitive roles where they were previously infeasible. Moreover, reduced latency enables new products and experiences that are only possible with near-instantaneous AI responses. Pursuing low latency therefore expands the usefulness and versatility of AI by allowing it to integrate seamlessly into interactive, time-critical systems.

Why does low latency matter for companies?

Low latency enables enterprises to make better use of AI in real-time applications such as chatbots, voice interfaces, and instant recommendations, which would falter with delays. It also improves time-sensitive processes such as fraud detection, supply chain coordination, and monitoring, all of which need quick reactions.

Faster AI responses improve customer and employee satisfaction while expanding the range of viable use cases. Decreased latency also provides a competitive advantage by enabling more dynamic, real-time products and decisions. However, building lower-latency systems may require architectural changes.