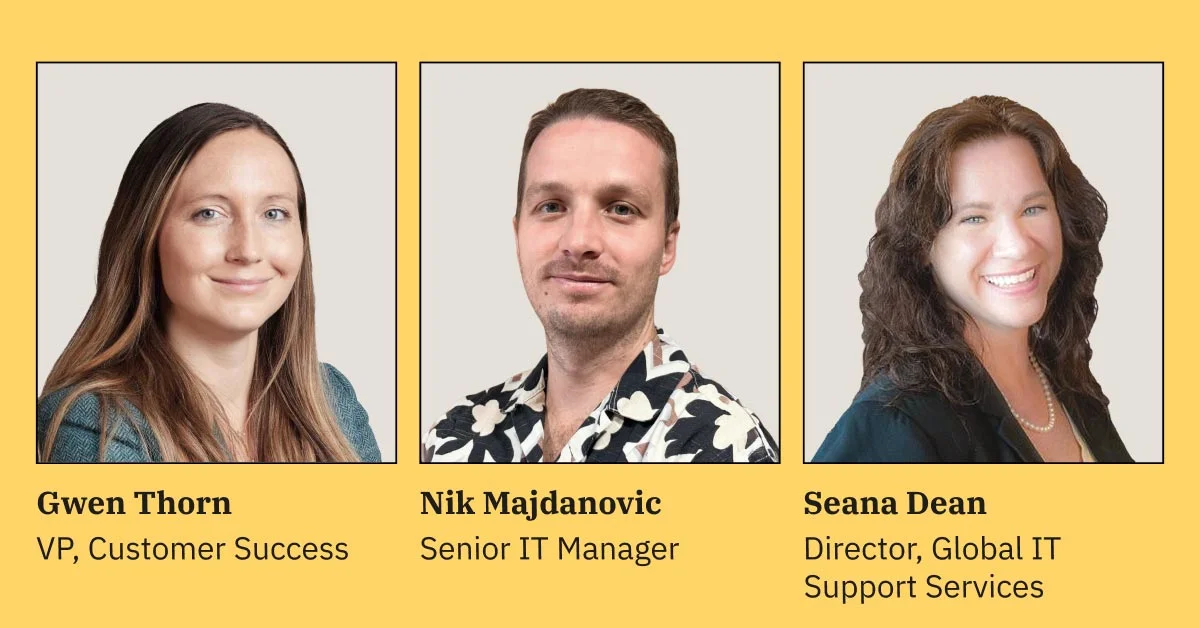

Success stories and statistics that speak for themselves

"Moveworks is an outstanding chatbot and Agentic AI software platform that allows for targeted resolution and support to the end user."

An Executive Director of Enterprise Solutions and Automation in the healthcare and biotech industry gave the Moveworks Platform a 5/5 rating in the Gartner Peer Insights™ Artificial Intelligence Applications in IT Service Management (Transitioning to AI Applications in IT Service Management) market. Read the full review here: https://gtnr.io/UWebMCNdK #gartnerpeerinsights

Disclaimer: Gartner® and Peer Insights™ are trademarks of Gartner, Inc. and/or its affiliates. All rights reserved. Gartner Peer Insights content consists of the opinions of individual end users based on their own experiences, and should not be construed as statements of fact, nor do they represent the views of Gartner or its affiliates. Gartner does not endorse any vendor, product or service depicted in this content nor makes any warranties, expressed or implied, with respect to this content, about its accuracy or completeness, including any warranties of merchantability or fitness for a particular purpose.

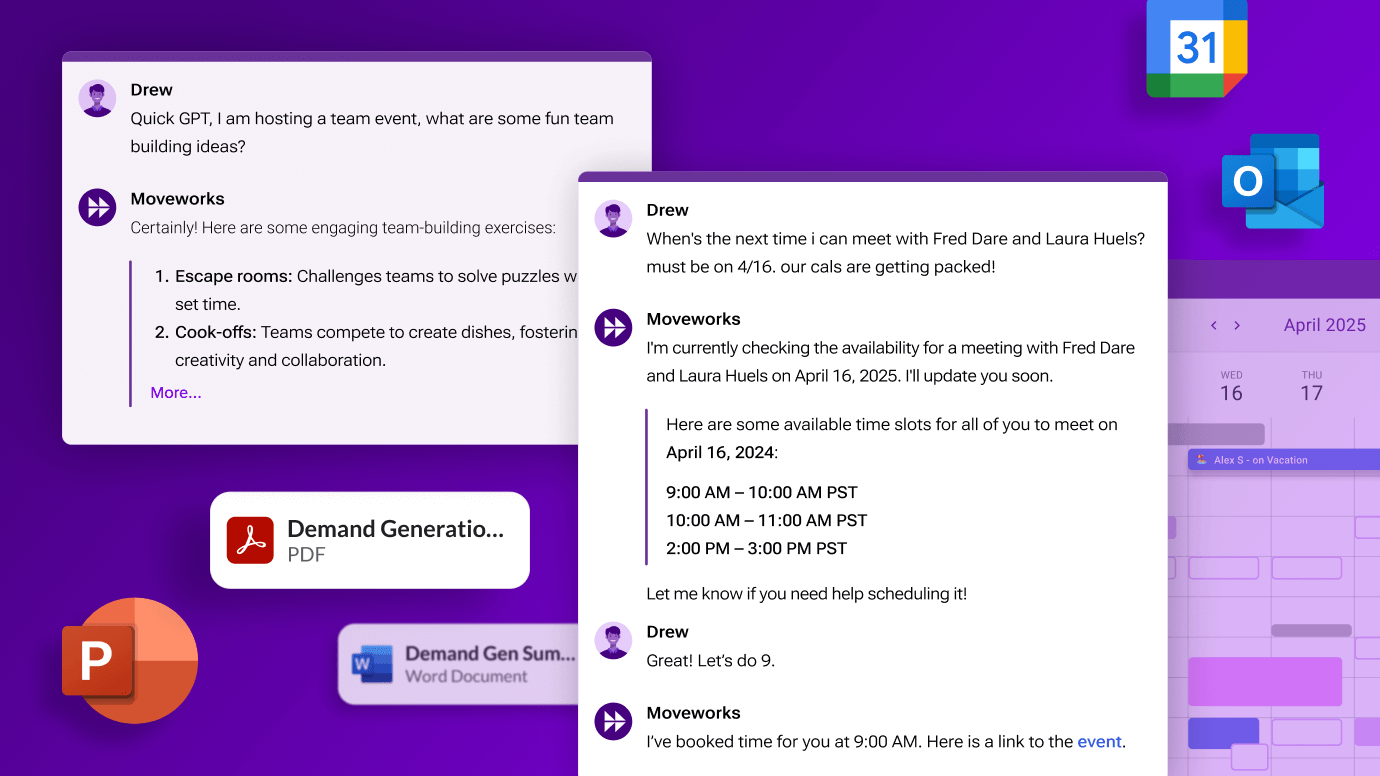

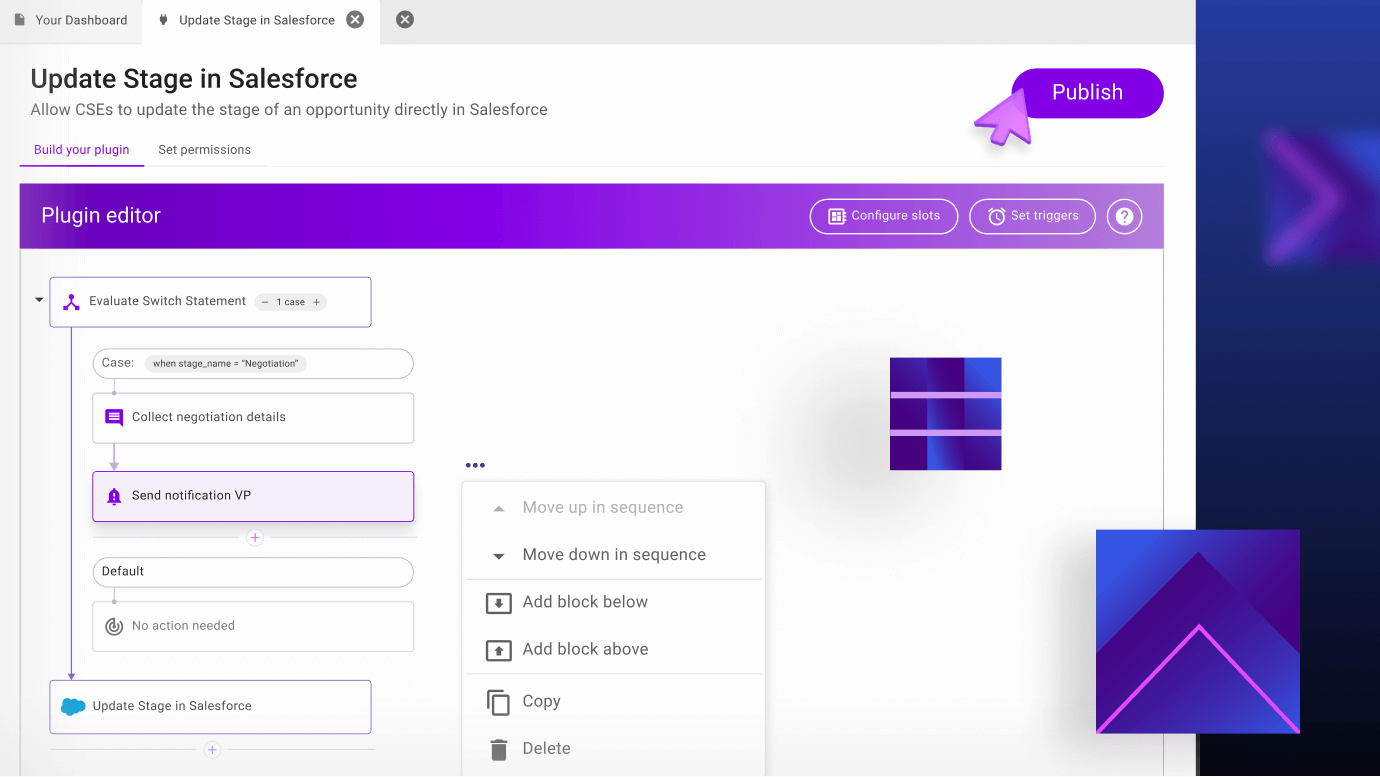

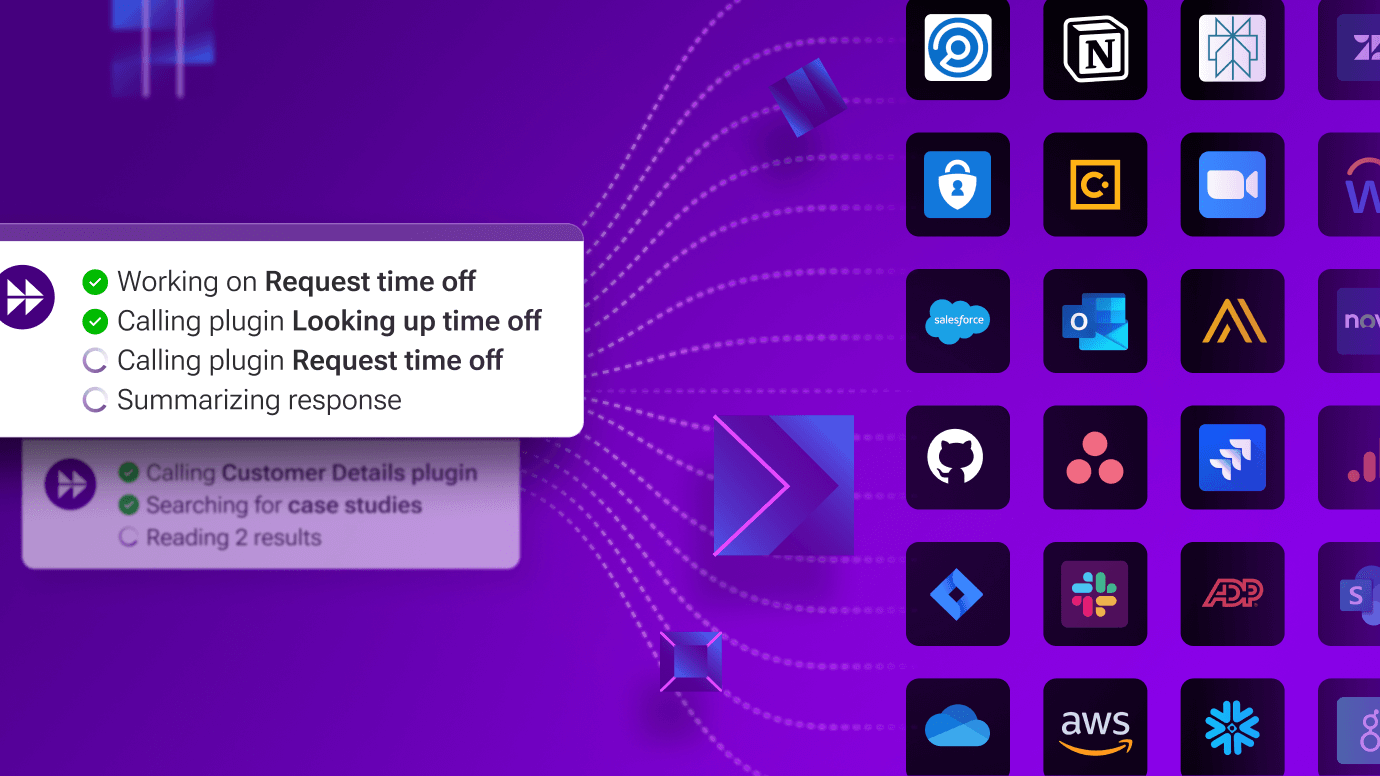

Deploy a production-grade agentic AI Assistant to all employees

Cutting-edge LLMs

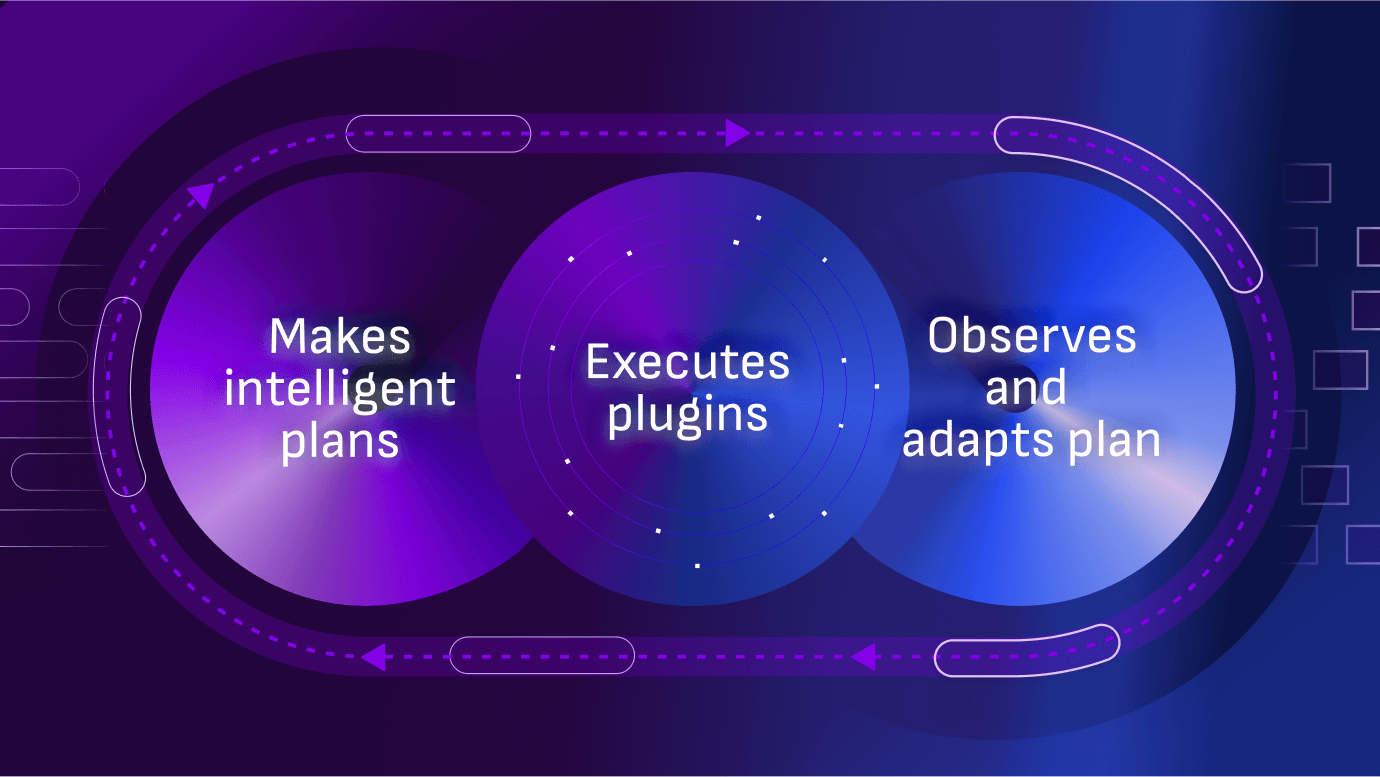

Built on the world’s most advanced LLMs from providers like OpenAI, our Reasoning Engine iteratively finds the best solution to every employee issue.

Knowledge grounding

Feel confident that all responses are backed by your company’s data. Answers include citation links to source content.

Robust evaluations

Our expert annotators – human and AI – continuously measure the performance of every production model and the AI Assistant overall to guide ongoing improvement.

Fine-tuned models

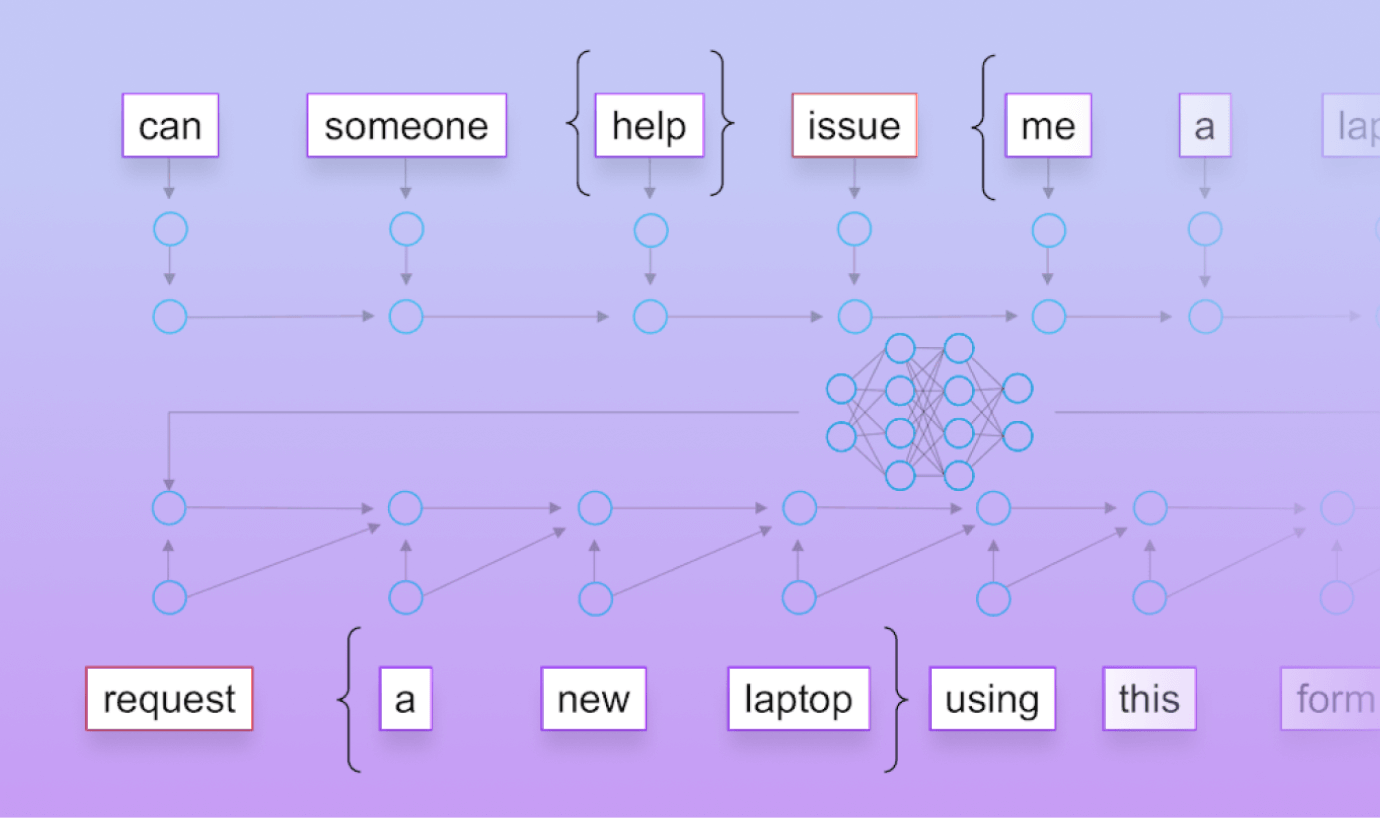

Leverage our latency-optimized, in-house search and NLU models, fine-tuned on proprietary data and hosted on our own infrastructure for speed, scalability, and seamless upgrades.

Entity grounding

Build a rich representation of your company’s acronyms and other named entities so the Assistant can interpret queries and search results in the context of your business.

Responsible AI

We protect your brand and data security with comprehensive safety and security guardrails. Our guardrails prevent the Assistant from engaging in toxic behavior or falling for dangerous direct or indirect prompt injection attacks.