Over the past three decades, a handful of products like Google's search engine, Tesla’s electric vehicles, and Apple's iPhone have been the tech industry's game-changers, leaving previous products in the dust. I think we can safely add OpenAI’s ChatGPT to that list.

What puts ChatGPT on the same level as these other industry-defining innovations? There are massive announcements every year in the tech world. Engineers everywhere are constantly working to build the next big disruptive thing. What makes ChatGPT different?

ChatGPT is shaking up businesses everywhere

Let’s ask another question: What separates game-changing tech from the status quo?

To answer, we’ll revisit a classic: The Innovator's Dilemma. In this book, author Clayton M. Christensen states that most new technologies are sustaining technologies that improve the performance of established products in ways mainstream customers traditionally value.

However, some technologies can be disruptive, offering a new value proposition. And while disruptive technologies may initially underperform established products in mainstream markets, they have other features that appeal to fringe customers, such as being cheaper, simpler, smaller, or more convenient.

To offer an example: the smartphone market. Apple disrupted this market by releasing the iPhone, which popularized the multi-touch screen, among other innovations, and essentially launched the mobile-first era.

But today’s smartphone has become a sustaining technology. Every year, manufacturers including Samsung, Huawei, and Apple release updated products to meet consumer demand. While there have been other innovations, such as folding screens, these haven’t transformed the market like the first iPhone did.

In a word: disruptive tech is practical. How a technology can be used is massively important to its success. It doesn’t matter how amazing your algorithm is if it isn’t useful or user-friendly.

To offer a second example, Google’s search engine revolutionized how we access and find information online. But this tool isn’t just helpful for someone trying to figure out whether Netflix streams their favorite TV show; it opened up a whole new world, playing a role in shaping both online and offline marketplaces. By prioritizing certain types of content and websites in the search results, the algorithm has influenced consumer behavior and the success or failure of businesses worldwide.

So, again: How does ChatGPT fit in? Its creator, OpenAI, took large language models (LLMs), which were previously found mostly in technical research papers, and turned them into an accessible technology. Giving people everywhere a way to experience the power of LLMs via a simple, conversational interface revealed what’s possible to the masses. There’s no going back. Now, every product that claims to be conversational AI must offer a similarly magical experience or fall into obscurity.

Understanding the new AI stack

I doubt generative AI will stop at helping people write emails. Much like Google revolutionized the world with its search engine and Apple put a smartphone in everyone’s pocket, ChatGPT will transform how people do business.

That said, deploying GPT-class models in production is no simple task. It requires an advanced machine learning operations (MLOps) platform, a team of human annotators to generate training data, and skilled engineers to optimize performance. Without prior experience using LLMs, it can take years to build the necessary infrastructure. However, companies with a history of using LLMs are in a good position to quickly adopt the capabilities of this tech in their products.

Suppose you’re someone who wants to incorporate conversational AI into your operations. The challenge for you is to figure out which conversational AI businesses are just hype and which are the real deal.

As more and more sales pitches from generative AI companies start filling your inbox, you need a surefire way to see through the marketing jargon and differentiate between the companies with the talent and experience to leverage this technology — and those that do not.

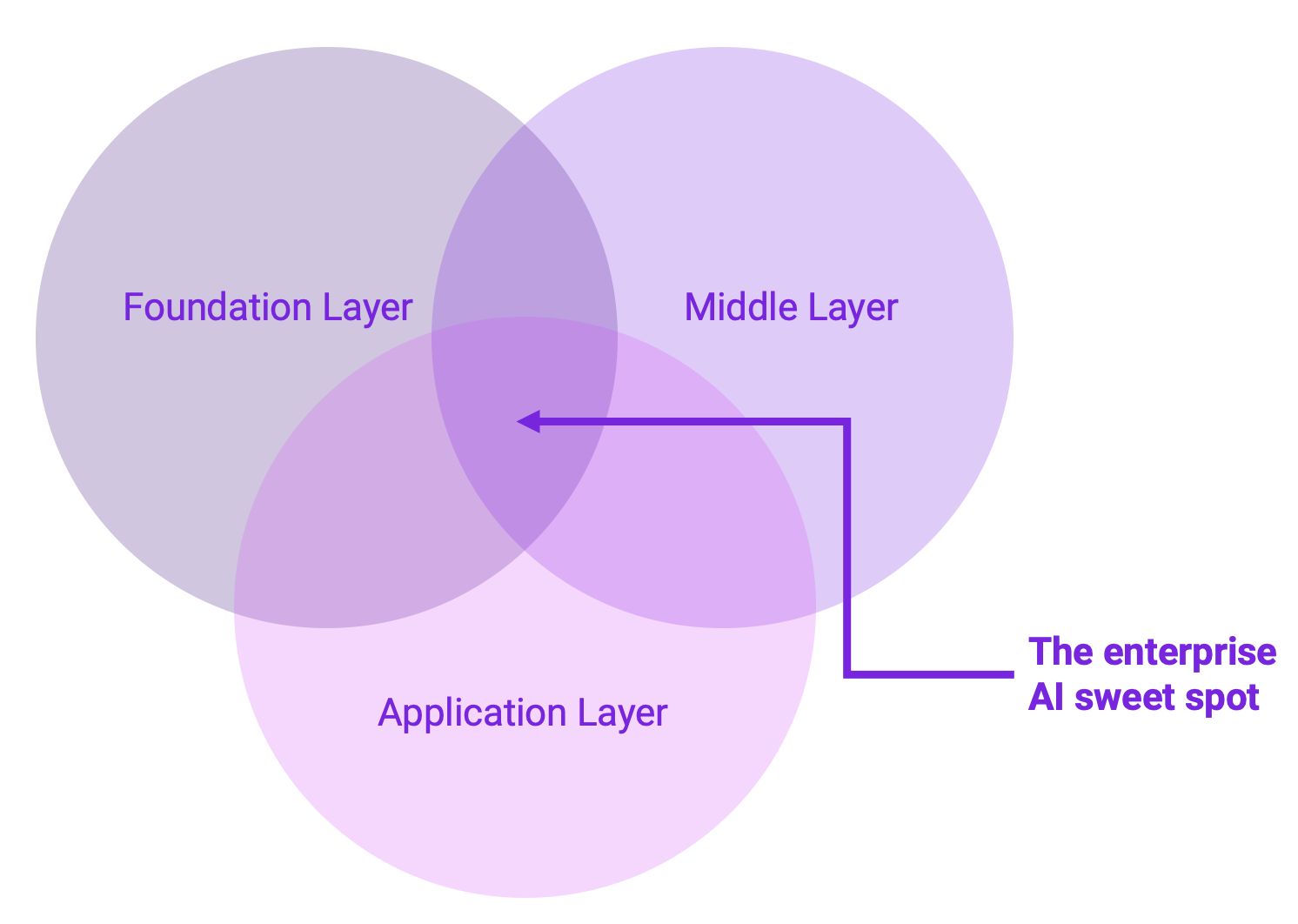

We’ll start with some basics. The conversational AI landscape can be divided into three layers: foundation, middle, and application.

Figure 1: When you’re investing in a new tool, remember: What’s hard and what’s expensive are ultimately the differentiators. A company that lies at the intersection of the foundation, middle, and application layers will be able to differentiate itself from the competition.

1. The foundation layer: The base of the AI stack

ChatGPT is the latest in a long line of genuinely game-changing generative AI technologies. As good as it is, though, ChatGPT isn’t a silver bullet. It does a lot of things well, even astoundingly well. It can serve information in tight sentences rather than long lists of blue links. It can explain concepts in ways that people can understand. And it can brainstorm business plans, term paper topics, birthday gift suggestions, and vacation plans.

It has such a wide breadth of knowledge because it is based on a foundation AI model; specifically, an LLM called GPT-3.5. Foundation AI models make up the base of the AI stack and are trained on enough data to offer a perspective on a wide range of topics. Products like GPT-3 for text, DALL-E 2 for images, or Whisper for speech are examples of how foundation models can be applied to broad categories of outputs: text, images, videos, speech, and games.

But — there are a couple of significant challenges when using foundation models.

For one, foundation models like GPT-3 are monoliths that are frozen in time. As with any trained model, the only way to change the output is to change the input. The particular capabilities of a generative AI system depend on how it’s trained and the types of information it is given.

While you may have heard the term “prompt engineering” to describe the work people do to adjust and control the outputs of these models by inputting specific terms and structures, ultimately, their knowledge is tied to the original training data. A foundation model can’t look up dynamic data or any real-time information to tell you the current share price of Microsoft stock, for example. And it can’t create new ideas from scratch.
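To make the "change the input" point concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a real API: `frozen_model` is a hypothetical stand-in for a foundation model whose knowledge was fixed at training time, `build_prompt` is a hypothetical helper, and the stock price in the usage example is made up. The only way the stub can give a current answer is if the application places fresh data in the prompt.

```python
from typing import Optional

# Hypothetical stand-in for a foundation model (not a real API).
# Its "knowledge" is a snapshot that never changes after training.
TRAINING_SNAPSHOT = {"capital of france": "Paris"}

CONTEXT_MARKER = "Context: "


def frozen_model(prompt: str) -> str:
    """Answer from context supplied in the prompt, else from the fixed snapshot."""
    if CONTEXT_MARKER in prompt:
        # Fresh facts are only usable when they arrive as part of the input.
        context = prompt.split(CONTEXT_MARKER, 1)[1].strip()
        return f"According to the supplied context, {context}"
    for topic, answer in TRAINING_SNAPSHOT.items():
        if topic in prompt.lower():
            return answer
    return "I don't have current information on that."


def build_prompt(question: str, live_context: Optional[str] = None) -> str:
    """Hypothetical helper: append live data to the question when available."""
    if live_context is None:
        return question
    return f"{question}\n{CONTEXT_MARKER}{live_context}"


if __name__ == "__main__":
    question = "What is the current share price of Microsoft stock?"
    # Same frozen model, two different inputs, two different outputs.
    print(frozen_model(build_prompt(question)))
    print(frozen_model(build_prompt(question, "MSFT last traded at 250.00 USD (made-up value).")))
```

The model itself never changes between the two calls. Whatever is responsible for fetching the live value sits outside the model, which is part of why a foundation model alone is not a finished product.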

Perhaps more importantly, many of the big tech players have their own foundation models based on the massive amount of data they can access. Microsoft was smart to partner with OpenAI early, and it will capitalize on this investment fast. Though Google isn’t leading the wave, its PaLM model is significantly bigger than OpenAI’s GPT-3 (540 billion parameters versus 175 billion), unlocking even more capabilities. These big cloud providers will fight to have offerings in this space. And the smaller, newer companies don’t stand a chance.

2. The middle layer: Models powered by specialized data

While the foundation layer offers a wide breadth of understanding, it’s not enough for businesses requiring 99.9% accuracy. By definition, foundation models offer general information and are fundamentally unfinished, requiring substantial building and productizing to be turned into something useful for more nuanced work. And that’s where the middle layer, and later the application layer, comes into play.

Products that live in the middle layer build smaller models capable of taking on more precise jobs. Trained on highly detailed and typically proprietary data, these models can write a knowledge base article drawing on details from your IT ecosystem. Or they can re-create a writer’s style and word choice. Or they could even edit stock photos to fit your exact brand specifications.

Often developed for a particular application, industry, vertical, or use case, these more specific models outperform foundation models in their particular wheelhouses.

Here’s where — to me — things start to get interesting. Companies can differentiate themselves by taking a foundation AI model and fine-tuning it to the needs of a particular business or industry. This is particularly powerful in fields where data is highly sensitive and specific domain knowledge is required to make accurate predictions, like finance, healthcare, energy, and manufacturing.

To offer an analogy: If Facebook, Google, Microsoft, and other tech giants have their own massive and well-equipped kitchens, you won’t be able to compete by just having a recipe book. But, if you have access to a wide variety of unique and high-quality ingredients and use them to create specialized dishes that complement the menu of the big players while also incorporating human expertise and feedback — that's where the real culinary success lies. The recipe may be necessary, but the ingredients are key.

The same goes for generative AI. Ultimately it’s the data that matters. Models are children of the data they’re trained on. Companies can differentiate from the competition by incorporating the specialized data they can access. This approach produces more nuanced results and a more defensible product that’s not just a flash in the pan.

3. The application layer: A conversational user interface

The application layer is the last step that brings all these layers of models together. I’m referring to the interface where humans and machines collaborate, such as the workflow tools that make the AI models accessible for business customers or consumer entertainment.
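The way the three layers fit together can be sketched as a small pipeline. This is a toy illustration under stated assumptions, not how any vendor's product is built: `foundation_model` and `it_support_model` are hypothetical stubs, the keyword routing is deliberately naive, and the VPN answer is made up.

```python
from typing import Callable, Dict

Model = Callable[[str], str]


def foundation_model(message: str) -> str:
    """Foundation layer (hypothetical stub): broad but generic answers."""
    return f"General answer to: {message}"


def it_support_model(message: str) -> str:
    """Middle layer (hypothetical stub): stands in for a smaller model
    trained on proprietary IT data. The answer text is made up."""
    return "Reset your VPN token in the self-service portal."


# Middle layer registry: keyword -> specialized model (keywords are illustrative).
SPECIALIZED_MODELS: Dict[str, Model] = {"vpn": it_support_model}


def application_layer(message: str) -> str:
    """Application layer: one conversational entry point that routes each
    message to a specialized model when one applies, else to the foundation model."""
    lowered = message.lower()
    for keyword, model in SPECIALIZED_MODELS.items():
        if keyword in lowered:
            return model(message)
    return foundation_model(message)


if __name__ == "__main__":
    print(application_layer("My VPN is down"))
    print(application_layer("Suggest a birthday gift"))
```

In this sketch, removing the `SPECIALIZED_MODELS` registry leaves an application layer that only forwards messages to the foundation model, with nothing of its own to offer.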

The application layer is crucial, especially in a post-ChatGPT world. Everyone is now expecting that magical conversational experience where anyone can write a prompt and get an answer.

The thing is that your product can’t just be an interface. Merely making API calls to other core foundation models isn’t enough to survive in such a competitive field. It may be easy to build these application layers, but they will struggle with retention and differentiation.

I've already run through free trials of at least ten different content generation tools in the past few weeks alone, and I don’t intend to renew any of them. It’s clear from the steady stream of marketing emails that pressure on this type of company is already mounting. They’re now offering discounted, unlimited plans, and we’re barely seven weeks out from ChatGPT’s launch.

There are, inevitably, going to be winners in this approach, but there are going to be more losers. Think about website-building platforms. You could learn some HTML and CSS to build a website or just use Squarespace. And for every Squarespace, a hundred other web-builders didn't make it.

If a company only provides workflow tools on top of widely available technologies, it may struggle to compete with larger companies that have their own versions of these tools. Is there a world where Google doesn’t release its version of ChatGPT in Google Docs? Or where Microsoft doesn’t leverage GPT-3 in its Office suite? I don’t think so. The foundation layer is available for everyone, so it won’t be a differentiator.

Companies that can bring unique datasets, train solutions, and offer precise answers at the application and operating system layers are more likely to be successful and highly valued for their solutions. And then, the interface becomes invaluable. ChatGPT has proven the versatility of conversation, and now users have high expectations.

To be genuinely competitive, products can’t just be a thin veneer on top of existing technologies. The companies that can bring a unique dataset and find a way to productize are the ones that will really make it big time.

ChatGPT made the world pay attention to generative AI. Now, you have a rubric to see through the hype.

ChatGPT has raised the bar for conversational AI, but it’s ultimately a base capability universally available to every business in the world. If you want to write a compelling outreach email, ChatGPT and the many, many competing applications on the market are going to be extremely helpful. But if you want to, for example, run a cost-efficient support organization, you’re going to need something else with more nuanced capabilities.

This is to say that when you’re investing in a new tool, remember: What’s hard and what’s expensive are ultimately the differentiators. A product without a unique value proposition won’t survive an extremely competitive market. If a vendor is only offering an application layer on top of a foundation model, it isn’t going to make it.

That’s why you must be thoughtful about what use cases you’re trying to solve and what specialized data your prospective vendor can access. Because if you invest in the wrong direction, you will end up with a solution as obsolete as the BlackBerry or Kodak.

In the near future, the next step in LLMs, GPT-4, will be announced, and it is almost guaranteed to blow everyone’s minds again. ChatGPT made the world pay attention, and it marked the beginning of conversational AI realizing its true potential in the enterprise. Companies are scrambling to spend billions of dollars deploying it or similar technology in their products. Make sure your budget ends up in the right hands.

Moveworks is the leading enterprise conversational AI platform.

ChatGPT wasn’t made to help you improve the employee experience; Moveworks was.

We’re focused on building the world’s leading conversational AI platform and have been for the last six years. We’re constantly innovating, plugged into the latest advances in the field, and looking for ways to improve our platform.

Today, we offer what I — and our customers — truly believe to be the best conversational AI platform for employee experience:

- We understand exactly what employees need, no matter which language they use.

- We offer actionable recommendations for your support environment.

- You can implement our platform in days, not weeks or months.

No matter your industry, conversational AI from Moveworks can elevate your employee experience, improving every interaction throughout their journey. Don’t just take our word for it — leading companies like Hearst and Palo Alto Networks have experienced incredible results with our platform.

Let us show you all you can get from conversational AI in a quick demo with our team.

Contact Moveworks to learn how AI can supercharge your workforce's productivity.